I got the below joke from my friend. Its very funny about co-relating Disk Drives with Girls.

HARD-DISK woman: She remembers everything, FOREVER.

RAM woman: She forgets about you, the moment you turn her off.

INTERNET woman: Difficult to access.

SERVER woman: Always busy when you need her.

CD-ROM woman: She is always faster and faster.

EMAIL woman: Every ten things she says, eight are nonsense.

VIRUS woman: Also called “wife”; when you are not expecting her, she comes, installs herself and uses all your resources. If you try to uninstall her you will lose something, if you don’t you will lose everything!!

njoy..

Thursday, December 24, 2009

When you co-relate Disk Drives with Girls - Funny Joke

Case Sensitive Search on a Case Insensitive SQL Server

In this post i would like to show you, how to implement case sensitive search on

a case insensitive SQL Server column.

First let me create a temp table:

--------------------------------

create table #EMP

(ID int, Name VARCHAR(30));

Insert into #EMP

Select 1, 'vijaya kadiyala' UNION ALL

Select 2, 'Vijaya Kadiyala' UNION ALL

Select 3, 'VIJAYA KADIYALA';

Now run the below query:

SELECT * FROM #EMP WHERE Name = 'VIJAYA KADIYALA'

This query retrieves all the records as shown in below figure:

The string literal what i passed to the query is in Upper case and i am expecting only the row which satisfies this condition. But by default SQL Server Collation attribute is Case insensitive. So SQL Server ignores the case of the string literal.

You can find the collation information by invoking the following procedure.

SELECT DATABASEPROPERTYEX('AdventureWorks2008', 'Collation')

The default collation property value is SQL_Latin1_General_CP1_CI_AS. The text which is Highlighted in green color indicates Case Insensitive.

Now to convert the query into case sensitive query, just add collation property as shown below.

SELECT * FROM #EMP WHERE Name = 'VIJAYA KADIYALA' COLLATE Latin1_General_CS_AS

Wednesday, December 23, 2009

Get Database Traffic Report in SQL Server

My Database team is working on, to find the database traffic on the server. The database is heavily used by the Online appllication and it is 24/7. We would like to see when the database is heavily loaded and see if there are any processes blocking each other which results in slower performance. One option which came to my mind is setting up a server side trace and capture rpc complete and sql batch complete events along with other events. So we decided to run the trace for 1 week during the first week of the month and 1 week during middle of the month and last week of the month because we are very sure that this is the time where the system is heavily loaded.

During this preriod of time i happen to read one article from technet. With the help of this article you can find the follwwing information:

- Determine who's connected to your SQL Server

- Dealing with blocked processes

- Performance killers

- Clues to SQL Server performance problems

Get A Traffic Report: Analyze Your Database Usage With System Tables

Why Upgrade to SQL Server 2008?

With the release of the most recent version of SQL Server, Microsoft has delivered a Trusted, Productive and Intelligent Enterprise Data Platform. There are enhancements and new capabilities engineered into SQL Server 2008 to enable your applications to run better and reduce the amount of time you need to spend managing them. If you have been running existing applications on SQL Server 2000 or 2005, you will find a set of exciting new capabilities to improve your applications and reduce support needs within a familiar management interface. Many of these new features can provide immediate benefits without the need to make application changes.

Key reasons to upgrade to SQL Server 2008

Experience the benefits right away - Significant enhancements ranging from Data and Backup compression to query performance and enhanced database mirroring are available without the need to modify your existing applications.

Enhanced security and auditing – Get data encryption and database auditing capabilities within existing applications.

Improved system management capabilities - Features like policy based server management and new tools such as Performance Data Collection help you effectively manage the growth of your data.

Performance Enhancements – There have been many performance enhancements made throughout the technology stack, including enhancements within Analysis Services, Reporting Services and Integration Services. For example Unisys and Microsoft set a new ETL performance record by loading one terabyte of data in less than 30 minutes using SQL Server 2008 Integration Services. Read about additional performance records.

Predictable System Response – New Features such as query governor and data compression along with general scalability enhancements provide scalable solutions that are more reliable for very large enterprise systems.

Developer productivity - Tools like Entity Framework and LINQ, and new date/time, FILESTREAM and spatial datatypes provide powerful and easy to use application development enhancements.

Enhanced Business Intelligence capabilities – additional reporting capabilities integrated with Microsoft Office applications and a new report designer application allows the creation of enterprise reports without the need for Developer Studio.

Application Compatibility & ease of Migration - There are upgrade tools available from Microsoft to help manage your upgrade from prior versions. Compatibility has been maintained with the majority of functionality which should enable most applications to upgrade seamlessly. Learn more about all the system changes from the prior version, and get started with infrastructure assessment and planning for upgrade to SQL Server 2008.

Mainstream support – With the latest version of SQL Server you can benefit from a long term and current support path. As of April 2008 SQL Server 2000 has moved off Mainstream support to an extended support path.

Consistent pricing and support – Microsoft continues its pricing policies of SQL Server 2005 with some additional improvements. In addition, by participating in Microsoft’s Software Assurance program you are eligible for product upgrades, support and other benefits.

Referece : http://www.microsoft.com/sqlserver/2008/en/us/why-upgrade.aspxTuesday, December 22, 2009

Print from the DataGrid view

I see one question in almost every technical forum. which is how to print the grid view data.

The DataGridView control in .NET is an amazing data representation control, and contains many advanced features that we could benefit from.

Please check out the below link which will give you very detailed information:

http://www.codeproject.com/KB/printing/datagridviewprinter.aspx

Convert PDF to Image Using Ghostscript API

In this post i would like to reference one link to generate image from PDF using external APIs.

You will need at least GhostScript 8.64 (other versions have a problem with Vista). After you have installed the program, just copy the gs32dll.dll from the installation directory (the bin subdirectory) to the directory where you have the EXE of my program.

Check out the below link for complete information:

http://www.codeproject.com/KB/cs/GhostScriptUseWithCSharp.aspx

Convert Rows to Columns with aggregate functions

create table #Marks

(ID int, Sub int, Value Int)

Select 1, 1, 10 Union all

Select 1, 2, 20 Union all

Select 1, 3, 25 Union all

Select 1, 4, 50 Union all

Select 2, 1, 15 Union all

Select 2, 2, 25 Union all

Select 2, 3, 30 Union all

Select 2, 4, 49

SELECT Id,

MAX(CASE WHEN Sub = 1 THEN Value ELSE '' END) AS Subject1,

MAX(CASE WHEN Sub = 2 THEN Value ELSE '' END) AS Subject2,

MAX(CASE WHEN Sub = 3 THEN Value ELSE '' END) AS Subject3,

MAX(CASE WHEN Sub = 4 THEN Value ELSE '' END) AS Subject4

FROM #Marks

GROUP BY Id

Monday, December 21, 2009

Qwitter and Twitter based on Newton's Third Law

Now a days Twitter is very famous. Every one says Just follow me on Twitter....

As per Newton's Third Law

For every action, there is an equal and opposite reaction.

When some one is following you on twitter you will get a notification. When some one is leaving you can even get the notification using Qwitter.

Qwitter e-mails you when someone stops following you on Twitter.

http://useqwitter.com/

isn't this kool??

Select the nth best amount with simple query

In this post i would like to show you a simple code, which is used to find the nth best amount with out using any RANK or MAX funcions.

Code is simple and straight forward. You just have to pass the value to the variable @N. If you want to find the TOP amount or highest price of any given product the you need to pass value 1 to the variable @N.

This code works on AdventureWorks2008 database.

DECLARE @N smallint

SET @N = 1

Select distinct PP_o.StandardCost

from Production.Product PP_o where (@N-1) = (select count(distinct(PP_i.StandardCost))

from Production.Product PP_i

WHERE PP_o.StandardCost < PP_i.StandardCost)

Reference: www.DotNetVJ.com

Custom Pagging in SQL Server using ROW_NUMBER Function

This is simple post to implement the pagination in SQL Server using Analytical functions.

-- Variable Declaration

DECLARE @PageLimit smallint, @CurrentPageNumber smallint

SET @PageLimit = 10 -- Setting Page Limit to 10

SET @CurrentPageNumber = 10 -- Current Page Number

DECLARE @StartRow INT

DECLARE @EndRow INT

-- Calculating the starting row

SET @StartRow = ((@CurrentPageNumber-1) * @PageLimit)

-- Calculation the Ending row

SET @EndRow = @StartRow + @PageLimit

-- Query to get the actual records based on Order by Clase

SELECT * FROM

(

SELECT ProductID,

Name,

ProductNumber,

ROW_NUMBER()OVER(ORDER BY Name) AS RowNumber

FROM Production.Product

) v

WHERE v.RowNumber > @StartRow AND v.RowNumber <= @EndRow

Friday, December 18, 2009

SQL Server Vs Oracle

My friend Pinal Dave (http://blog.sqlauthority.com/) posted one very good article on "Differences in Vulnerability between Oracle and SQL Server". This is a kinid of topic which will never end.

Its a very good topic and each one one us has different opinion and also every one should take this in +ve mode.

I will not say which one is better here. Coz each of these are best on their own.

Based on my consulting exprience in US and UK, Database servers are decided based on the following factors:

1) The application which is used to access the tables. If the front-end application is in .net or any of the microsoft products then default database server is SQL Server. If the front-end application is in Java then default database server is oracle.

2) selecting database server is entirely based on the big picture of enterprise architecture. if the firm is using SQL Server right from the begining then they preffer to go with SQL Server than changing the whole architecture.

I know these are not the only factors but these are the two answers i get normally when i review the architecture.

On the other-hand, what i have seen now a days, people are looking more towards vertica or Sybase IQ or Netezza. which are mainly for datawarehouse applications. So i think in the next 2 to 3 years there would be a huge changes to various database architectures.

Wednesday, October 14, 2009

Microsoft Most Valuable Professional Award - 2009 - SQL Server Architecture

Dear Vijay Krishna Kadiyala,

Congratulations! We are pleased to present you with the 2009 Microsoft® MVP Award! This award is given to exceptional technical community leaders who actively share their high quality, real world expertise with others. We appreciate your outstanding contributions in SQL Server technical communities during the past year.

Also in this email:

About your MVP Award Gift

How to access http://www.mvpaward.com to get your technical subscription benefits

Your MVP Identification Number

MVP Award Program Code of Conduct

The Microsoft MVP Award provides us the unique opportunity to celebrate and honor your significant contributions and say "Thank you for your technical leadership."

Toby Richards

General Manager

Community & Online Support

Monday, September 7, 2009

Mindcracker Most Valuable Professional Award - 2009

Just got an award from one of the most popular Technical community...

Dear Vijaya,

Congratulations! You have received the Mindcracker Most Valuable Professional Award for 2009. This award is given for your extra ordinary contributions on C# Corner and Mindcracker Network web sites.

Thank you for your valuable contributions and time. We really appreciate it.

Please send us your latest shipping address (day time) and phone number and we will ship you the prizes.

Thank you again for being a part of most popular community for .NET developers.

Cheers!

Praveen Kumar

Editorial Director

Mindcracker Network

Tuesday, August 25, 2009

Implementation of NOT IN operator with out using NOT IN....

One question that people always ask is “Is there any other way to do it”. Even if you give all the possible solutions to a problem still the same question arises.

If some one is asking you a question, I have a problem with my SQL query using “NOT IN” operator. It is taking long time to run. Can you give me alternative solution to the “NOT IN”? This is very generic question and the best answer to this kind of question is "It Depends".

I am sure the question looks very familiar to most of us. In this post I am going to provide different ways to implement “NOT IN” operator. Although it’s going to be extremely difficult to say which one is better? It completely depends on the situation and need.

Using NOT IN Operator

==================

SELECT ProductID,

Name,

ProductNumber,

SellStartDate

FROM Production.Product

WHERE ProductID NOT IN (1, 3, 4, 10)

Using Logical Operators

===================

SELECT ProductID,

Name,

ProductNumber,

SellStartDate

FROM Production.Product

WHERE ProductID != 1 AND ProductID != 3

AND ProductID != 4 AND ProductID != 10

Using NOT EXISTS Operator with UNION ALL

==================================

SELECT p.ProductID,

p.Name,

p.ProductNumber,

p.SellStartDate

FROM Production.Product p

WHERE NOT EXISTS( SELECT * FROM (

SELECT 1 V UNION ALL

SELECT 3 UNION ALL

SELECT 4 UNION ALL

SELECT 10 ) z

where z.v = p.ProductID )

Using Left Outer Join or Left Join

=========================

SELECT p.ProductID,

p.Name,

p.ProductNumber,

p.SellStartDate

FROM Production.Product p

left join

(

SELECT 1 V UNION ALL

SELECT 3 UNION ALL

SELECT 4 UNION ALL

SELECT 10 ) z

on z.v = p.ProductID

WHERE z.v IS NULL

Tuesday, July 28, 2009

Database Answers To Real Life Questions -- Creative Joke

Q. What if your Dad loses his car keys ?

A. 'Parent keys not found !'

Q. What if your old girl friend spots you with your new one ?

A. 'Duplicate value on index !'

Q. What if the golf ball doesn't get into the hole at all ?

A. 'Value larger than specified precision !'

Q. What if you try to have fun with somebody else's girlfriend and get kicked out ?

A. 'Insufficient privileges on the specified object!'

Q. What if you don't get any response from the girl next door ?

A. 'No data found !' or 'Query caused no rows retrieved !'

Q. What if you get response from the girl next door and her Mom too ?

A. 'SELECT INTO returns too many rows !'

Q. What if you dial a wrong number ?

A. 'Invalid number' or ' Object doesn't exist !'

Q. What if you try to beat your own trumpet ?

A. 'Object is found mutating !'

Q. What if you are too late to office and the boss catches you ?

A. 'Discrete transaction failed !'

Q. What if you see 'theatre full' when you go to a movie ?

A. 'Maximum number of users exceeded !'

Q. What if you don't get table in the lunch room ?

A. 'System out of space !'

Parameter Sniffing & Stored Procedures Execution Plan

"Parameter sniffing" refers to a process whereby SQL Server's execution environment "sniffs" the current parameter values during compilation or recompilation, and passes it along to the query optimizer so that they can be used to generate potentially faster query execution plans. The word "current" refers to the parameter values present in the statement call that caused a compilation or a recompilation.

Check out the complete article about Parameter sniffing

Thursday, July 23, 2009

Columnar Database - New Tech in BI space

I came accross very interesting database design to hold huge amounts of data. It's not a traditional RDBMS system. It's totally different.

A column-oriented DBMS is a database management system (DBMS) which stores its content by column rather than by row. This has advantages for databases such as data warehouses and library catalogues, where aggregates are computed over large numbers of similar data items. This approach is contrasted with row-oriented databases and with correlation databases, which use a value-based storage structure.

Check out the basics of Columnar database

Few points about this database from MVP and SQLServerBible Author, Paul Nielsen

Friday, July 17, 2009

Why We should Use EXISTS instead of DISTINCT to improve Query Performance

Today, I am going to give you a tip to improve the query performance when you are using DISTINCT to eliminate duplicate records.

My team member wants to find all the department names where each department has at least one employee.

So his query is:

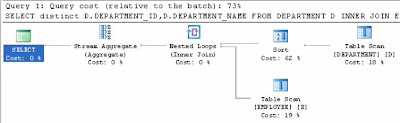

SELECT distinct D.DEPARTMENT_ID,D.DEPARTMENT_NAME

FROM DEPARTMENT D INNER JOIN EMPLOYEE E

ON D.DEPARTMENT_ID = E.DEPARTMENT_ID

This is Ok where my team member is joining Employee and Department table and then taking distinct department Names and IDs.

Lets look at the execution plan:

Estimated Sub Tree Cost : 0.0182019

Query Cost : 73%

My Query is:

When I look at the requirement little bit close, the requrement says If the department has atleast one employee then return the department Name. The perfect solution which satisfies this requirement is with EXISTS.

So I modified the query with the EXISTS clause.

SELECT D.DEPARTMENT_ID,D.DEPARTMENT_NAME

FROM DEPARTMENT D WHERE EXISTS (SELECT 1 FROM EMPLOYEE E WHERE D.DEPARTMENT_ID = E.DEPARTMENT_ID)

Lets look at the execution plan:

Estimated Sub Tree Cost : 0.0067844

Query Cost : 27%

As you can see from the results there is signifact of difference in the Query Cost.

So use EXISTS instead of DISTINCT. Also it doesn't mean that it EXISTS can be used at all the places where you have DISTINCT. This basically on case by case.

Thursday, July 16, 2009

Processing Order of SELECT Statement

In my previous article I talked about what happens when you issue INSERT statement. In this article I am going to talk about Processing order of a SELECT statement.

1. FROM

2. ON

3. JOIN

4. WHERE

5. GROUP BY

6. HAVING

7. SELECT

8. DISTINCT

9. ORDER BY

10. TOP

Tuesday, July 14, 2009

Enable SQL Full-text Filter daemon Launcher Process

In this post I would like to give you simple tip to enable SQL Full-text Filter daemon Launcher (MSSQLSERVER):

This navigation is based on XP:

1) Click Start Menu

2) Go to All Programs

3) Go to Microsoft SQL Server 2008

4) Go to Configuration Tools

5) Click on SQL Server Configuration Manager

6) Click on SQL Server Services in the Left Pane.

7) On the Right hand side you should see a row with the name SQL Full-text Filter Daemon Launcher (MSSQLSERVER).

8) Right Click on this and go to Properties

9) Go to Service tab

10) Change the start mode to Automatic.

11) Go to Logon and Click Start button to start.

That’s it.

How to find whether Full Text is Installed or not?

In this post I would like to give you simple tip to find out whether Full Text is installed or not.

Just run the below query in SQL Server Management Studio.

SELECT FULLTEXTSERVICEPROPERTY('IsFulltextInstalled')

Or

SELECT SERVERPROPERTY('IsFullTextInstalled')

If the above query returns value 1, that means Full text is installed.

Why we should avoid * in SELECT Clause to improve query performance

Why SQL Server experts say don’t use * in SELECT clause. Always use the columns what you need. In this article I am going to list out the points with examples on why we should avoid * in the SELECT clause.

Let’s create a table with 100K records. In my case I created VJ_TEST table based sys.sysmessages using SELECT statement. To test the performance of the query you really need a big table.

Step 1: Creating a test table

select * into VJ_TEST from sys.sysmessages

From this table, I need Error,Description columns to my application based on Severity column. i.e. When ever I query this table I always use severity column.

So I created a Covering index on these columns.

Step 2: Creating Covering Index

CREATE NONCLUSTERED INDEX IDX_VJ_TEST ON VJ_TEST(SEVERITY) INCLUDE (Error,description)

Step 3A: Query the table using * and see the execution plan.

SELECT * from VJ_TEST WHERE SEVERITY = 24

Estimated CPU Cost is 0.0004598

Estimated SubTree Cost is 0.356421

Though this query is using Index to filter the records but it is still scaning the table to get all the columns.

Step 3B: Query the table using Columns and see the execution plan.

SELECT Error,description from VJ_TEST WHERE SEVERITY = 24

Estimated CPU Cost is 0.000278

Estimated SubTree Cost is 0.0048638

As you can see by specifying the columns in the where clause it is going for Index Seek.

So based on this excersise we can say using columns in where clause always faster than using *.

Point 1: Always use the required Columns in the SELECT clause

Point 2: If you are transfering less data over the network then it will ocupy less bandwidth and faster to send.

Point 3: From look and feel point of view it would be very good and easy to understand.

Monday, July 13, 2009

Could not open a connection to SQL Server

Today, I was trying to connect to SQL Server and I got the below message. I don’t know what went wrong I restarted my machine.

Error:

A network-related or instance-specific error occurred while establishing a connection to SQL Server. The server was not found or was not accessible. Verify that the instance name is correct and that SQL Server is configured to allow remote connections. (provider: Shared Memory Provider, error: 40 - Could not open a connection to SQL Server) (Microsoft SQL Server, Error: 2)

Solution:

I did little bit of R&D around this. What I am trying to do is using SSMS connecting to the SQL Server. SSMS is a front-end tool for SQL operations. And the error message shows that "Could not open a connection to SQL Server".

So I thought SQL Server service is not running. So I opened SQL Server Configuration wizard to see if I am right or wrong. Yes I am right. SQL Server service is stopped. I restarted the service and now everything looks fine.

Thursday, July 9, 2009

What to do when SELECT query is Running Slowly?

In this post, i would like to give you 10 simple tips to follow when query is running slowly.

1) Review objects involved in the Query.

-- 1.1) Make sure you dont have any other columns in the SELECT clause which are not used any where in the application or in any other code.

-- 1.2) Make sure you are not using * to retrieve the columns from the table when you are not interested in all the columns.

2) Close look at the tables and their structures.

-- 2.1) Gather the No.Of Records in the table and Access pattern of the table.

-- 2.2) Make sure the table is partitioned if it holds huge amount of data.

-- 2.3) Gather the Indexes information on these tables.

-- 2.4) Make sure you are using Alias to the tables, if you have too many tables in FROM clause.

3) Very Close look at the Joining conditions in WHERE clause.

-- 3.1) Verify the joining conditions between the tables.

-- 3.2) For N tables in FROM clause you should have atleast N-1 where conditions.

-- 3.3) Make sure your query is not producing cartesian product.

4) Check on the Joining columns for data type conversions.

-- 4.1) Make sure you are not comparing Numbes with Char. etc. If you are doing this then there is an issue in the Table design. If you can't alter the column the try to denormalize the table. which means have duplicate information. If this takes less time to implement.

5) Check on the Joining columns for any functions

-- 5.1) Make sure you are not using any functions on the Joining columns. Its important to note that if you use any functions on the indexed columns in WHERE clause then engine will not use the Index to fetch or locate the data.

6) Check these Joining columns against Indexes on the table and Order of the columns in the Index.

-- 6.1) Make sure the order of the columns in WHERE clause should match with the Order of the columns in the Index.

7) Look at the Actual Execution Plan and see if there is anything strange.

-- 7.1) Look at the execution plan and take necessary actions like adding hint or creating a covering Index or Indexed views or make use of the temp table to break the logic of the query critieria.

8) Check whether statistics are up to date.

-- 8.1) This is very important step. If the stats are not up to date then this can lead the engine to take wrong path to locate the data and taking wrong path means that full scan is made when an index scan would have been appropriate.

9) Run the trace to find more information around the query.

-- 9.1) SQL Server Profiler shows how SQL Server resolves queries internally. SQL:BatchCompleted event and the RPC:CompletedEvent events should capture performance for all your SQL batches and stored procedures. The main attributes in trace are Reads, Writes, Duration, CPU, EndTime.

10) Run the Query using Database Engine Tuning Advisor.

-- 10.1) Database Engine Tuning Advisor is used examine how queries are processed in the databases and then it recommends how you can improve query performance by providing tips on having additional indexes.

This article is mainly to give general tips and these are very common tips. There are no hard and fast rules to solve Query performance issues. Its completly based on the queries and the requirement and most importantly, it is specific to the environment.

Hopefully i can come up with an article with all the measures.

Wednesday, July 8, 2009

Find out the objects on which the view depends either directly or indirectly

I would like to give you a simple query to find out objects where view depends on either directly or in-directly.

so Lets create few tables and views to test the query.

-- To Create EMPLOYEE Table

CREATE TABLE [dbo].[EMPLOYEE](

[EMP_ID] [int] IDENTITY(1,1) NOT NULL,

[FIRST_NAME] [varchar](30) NULL,

[MIDLE_NAME] [varchar](30) NULL,

[LAST_NAME] [varchar](30) NULL,

[SALARY] [bigint] NULL,

[DEPARTMENT_ID] [int] NULL

)

-- To Create DEPARTMENT table

CREATE TABLE [dbo].[DEPARTMENT](

[DEPARTMENT_ID] [int] IDENTITY(10,10) NOT NULL,

[DEPARTMENT_NAME] [varchar](30) NULL

)

-- Test View 1

CREATE VIEW V_Emp_Info as

SELECT E.*,( SELECT D.DEPARTMENT_NAME FROM DEPARTMENT D WHERE D.DEPARTMENT_ID = E.DEPARTMENT_ID ) AS DEPT_NAME

FROM EMPLOYEE E

-- Test View 2

CREATE VIEW V_EMP_TEST AS

SELECT V.* FROM EMPLOYEE E, V_EMP_INFO V

WHERE E.FIRST_NAME = V.FIRST_NAME

-- Query to get all the objects on this this view depends either directly or indirectly.

WITH Depends_CTE AS (

SELECT VIEW_SCHEMA,TABLE_CATALOG,TABLE_SCHEMA,TABLE_NAME ,VIEW_NAME, CAST('Directly' AS VARCHAR(30)) as How

FROM Information_Schema.View_Table_Usage

WHERE VIEW_NAME = 'V_EMP_TEST'

UNION ALL

SELECT e.VIEW_SCHEMA,e.TABLE_CATALOG,e.TABLE_SCHEMA,e.TABLE_NAME ,e.VIEW_NAME,CAST('In-Directly' AS VARCHAR(30)) AS HOW

FROM Information_Schema.View_Table_Usage e

INNER JOIN Depends_CTE d ON e.VIEW_NAME = d.TABLE_NAME

)

SELECT *

FROM Depends_CTE

Just pass the view name to the query.

This implementation is using Recursive CTEs (Common Table Expressions).

Tuesday, July 7, 2009

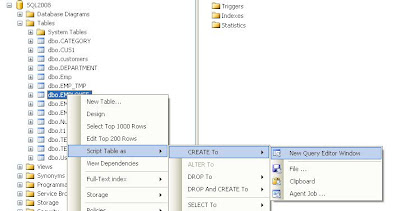

Generate DDL of a Table Using SQL Server Management Studio

>>>> Open and Login into SQL Server Management Studio (SSMS)

>>>> Choose the database in the Object Explorer

>>>> Expand the Tables section

>>>> Right Click on the Table Name and Script Table As > CREATE to > New Query Editor Window. (Please look at the below picture).

This will open a new window with CREATE Table statement.

Date Format using CONVERT function in SQL Server

Today, i would like to list out various styles of date display formats. These are very helpful for the Front-end application programmers where they want to display different date formats.

Various date style formats can be produced with CONVERT function. CONVERT function need 3 parameters.

CONVERT ( data_type [ ( length ) ] , expression [ , style ] )

The first parameter is return data type, second parameter is expression, third parameter which is optional parameter defines the style.

NOTE: Do not apply CONVERT function on date column when it is Indexed.

with out waiting lets look at the various styles of date values.

The below query produces the output in MON DD YYYY HH:MIAMPM format.

SELECT CONVERT(VARCHAR(30),GETDATE(),100)

--Output (MON DD YYYY HH:MIAMPM)

--Jul 7 2009 2:19PM

The below query produces the output in MM/DD/YYYY format.

SELECT CONVERT(VARCHAR(30),GETDATE(),101)

--Output (MM/DD/YYYY)

--07/07/2009

The below query produces the output in YYYY.MM.DD format.

SELECT CONVERT(VARCHAR(30),GETDATE(),102)

--Output (YYYY.MM.DD)

--2009.07.07

The below query produces the output in DD/MM/YYYY format.

SELECT CONVERT(VARCHAR(30),GETDATE(),103)

--Output (DD/MM/YYYY)

--06/07/2009

The below query produces the output in DD.MM.YYYY format.

SELECT CONVERT(VARCHAR(30),GETDATE(),104)

--Output (DD.MM.YYYY)

--06.07.2009

The below query produces the output in DD-MM-YYYY format.

SELECT CONVERT(VARCHAR(30),GETDATE(),105)

--Output (DD-MM-YYYY)

--06-07-2009

The below query produces the output in DD MON YYYY format.

SELECT CONVERT(VARCHAR(30),GETDATE(),106)

--Output (DD MON YYYY)

--06 Jul 2009

The below query produces the output in MON DD,YYYY format.

SELECT CONVERT(VARCHAR(30),GETDATE(),107)

--Output (MON DD,YYYY)

--Jul 06, 2009

The below query produces the output in HH24:MI:SS format.

SELECT CONVERT(VARCHAR(30),GETDATE(),108)

--Output (HH24:MI:SS)

--14:24:20

The below query produces the output in MON DD YYYY HH:MI:SS:NNN AMPM format. Use 113 style to get date and time with nano seconds in AM/PM format.

SELECT CONVERT(VARCHAR(30),GETDATE(),109)

--Output (MON DD YYYY HH:MI:SS:NNN AMPM)

--Jul 7 2009 2:24:35:490PM

The below query produces the output in MM-DD-YYYY format.

SELECT CONVERT(VARCHAR(30),GETDATE(),110)

--Output (MM-DD-YYYY)

-- 07-07-2009

The below query produces the output in YYYY/MM/DD format.

SELECT CONVERT(VARCHAR(30),GETDATE(),111)

--Output (YYYY/MM/DD)

--2009/07/07

The below query produces the output in YYYYMMDD format.

SELECT CONVERT(VARCHAR(30),GETDATE(),112)

--Output (YYYYMMDD)

--20090707

The below query produces the output in MON DD YYYY HH24:MI:SS:NNN format. Use 113 style to get date and time with nano seconds in 24 Hour Format.

SELECT CONVERT(VARCHAR(30),GETDATE(),113)

--Output (MON DD YYYY HH24:MI:SS:NNN)

--07 Jul 2009 14:26:24:617

The below query produces the output in HH24:MI:SS:NNN format. Use 114 Style to extract Time part with nano seconds in 24 Hour Format.

SELECT CONVERT(VARCHAR(30),GETDATE(),114)

--Output (HH24:MI:SS:NNN)

--14:26:48:953

Monday, July 6, 2009

Information Schema Views in SQL Server

In this article, I would like to list out the SQL Server metadata tables which we need in our day-to-day work.

SELECT * FROM Information_Schema.CHECK_CONSTRAINTS

-- Contains one row for each CHECK constraint in the current database.

SELECT * FROM Information_Schema.COLUMN_DOMAIN_USAGE

-- Contains one row for each column, in the current database, that has a user-defined data type.

SELECT * FROM Information_Schema.COLUMN_PRIVILEGES

--Contains one row for each column with a privilege either granted to or by the current user in the current database

SELECT * FROM Information_Schema.COLUMNS

--Contains one row for each column accessible to the current user in the current database.

SELECT * FROM Information_Schema.CONSTRAINT_COLUMN_USAGE

--Contains one row for each column, in the current database, that has a constraint defined on it.

SELECT * FROM Information_Schema.CONSTRAINT_TABLE_USAGE

--Contains one row for each table, in the current database, that has a constraint defined on it.

SELECT * FROM Information_Schema.DOMAIN_CONSTRAINTS

--Contains one row for each user-defined data type, accessible to the current user in the current database, with a rule bound to it.

SELECT * FROM Information_Schema.DOMAINS

--Contains one row for each user-defined data type accessible to the current user in the current database.

SELECT * FROM Information_Schema.KEY_COLUMN_USAGE

--Contains one row for each column, in the current database, that is constrained as a key.

SELECT * FROM Information_Schema.PARAMETERS

--Contains one row for each parameter of a user-defined function or stored procedure accessible to the current user in the current database.

SELECT * FROM Information_Schema.REFERENTIAL_CONSTRAINTS

--Contains one row for each foreign constraint in the current database.

SELECT * FROM Information_Schema.ROUTINE_COLUMNS

--Contains one row for each column returned by the table-valued functions accessible to the current user in the current database.

SELECT * FROM Information_Schema.ROUTINES

--Contains one row for each stored procedure and function accessible to the current user in the current database.

SELECT * FROM Information_Schema.SCHEMATA

--Contains one row for each database that has permissions for the current user.

SELECT * FROM Information_Schema.TABLE_CONSTRAINTS

--Contains one row for each table constraint in the current database.

SELECT * FROM Information_Schema.TABLE_PRIVILEGES

--Contains one row for each table privilege granted to or by the current user in the current database.

SELECT * FROM Information_Schema.TABLES

--Contains one row for each table in the current database for which the current user has permissions.

SELECT * FROM Information_Schema.VIEW_COLUMN_USAGE

--Contains one row for each column, in the current database, used in a view definition.

SELECT * FROM Information_Schema.VIEW_TABLE_USAGE

--Contains one row for each table, in the current database, used in a view.

SELECT * FROM Information_Schema.VIEWS

--Contains one row for views accessible to the current user in the current database.

Reference :

Thursday, June 18, 2009

What Happens When You Issue INSERT statement

Today I was in the interview panel, and one of the panelists asked one very basic question. The question is “What happens when you execute INSERT statement on table which has Identity column, Primary Key, Check constraint, Not Null Constraint, Foreign key, Instead Of Trigger and After Trigger.” Which one gets executed first?

This question looks very simple but its bit tricky. by the time he answers the question, I was trying to find the answer to this by creating simple example.

The Order is:

1) IDENTITY Insert check

2) Not Null Constraint Validation.

3) Data Type Verification

4) Instead of trigger execution If it exists.

5) Primary key Constraint Validation

6) Check Constraint Validation

7) Foreign Key Constraint Validation

8) After Trigger Execution

Wednesday, June 17, 2009

Insert Multiple Rows with Single INSERT statement in SQL Server

Today, I am going to give you simple and efficient TIP to insert multiple records with single INSERT statement.

--Lets Create a Dummy table

CREATE TABLE EMPLOYEE(

EMP_ID INT IDENTITY(1,1),

FIRST_NAME VARCHAR(30),

MIDLE_NAME VARCHAR(30),

LAST_NAME VARCHAR(30),

SALARY BIGINT,

DEPARTMENT_ID INT

)

-- Single Insert statement with multiple Value Clauses

INSERT INTO Employee(FIRST_NAME,MIDLE_NAME,LAST_NAME,SALARY,DEPARTMENT_ID)

Values ('Vijaya','Krishna','Kadiyala',20000,10),

('Namrath',Null,'Kadiyala',40000,10),

('Glen',Null,'Jhonson',35000,20),

('Ray','Fine','Muran',21000,20)

That’s it.

If we didn’t had this feature then we had to use following techniques to do the same.

Using Multiple Insert statements:

INSERT INTO Employee(FIRST_NAME,MIDLE_NAME,LAST_NAME,SALARY,DEPARTMENT_ID)

Values ('Vijaya','Krishna','Kadiyala',20000,10);

INSERT INTO Employee(FIRST_NAME,MIDLE_NAME,LAST_NAME,SALARY,DEPARTMENT_ID)

Values ('Namrath',Null,'Kadiyala',40000,10);

INSERT INTO Employee(FIRST_NAME,MIDLE_NAME,LAST_NAME,SALARY,DEPARTMENT_ID)

Values ('Glen',Null,'Jhonson',35000,20);

INSERT INTO Employee(FIRST_NAME,MIDLE_NAME,LAST_NAME,SALARY,DEPARTMENT_ID)

Values ('Ray','Fine','Muran',21000,20);

Using SELECT statement with Union All:

INSERT INTO Employee(FIRST_NAME,MIDLE_NAME,LAST_NAME,SALARY,DEPARTMENT_ID)

SELECT 'Vijaya','Krishna','Kadiyala',20000,10

UNION ALL

SELECT 'Namrath',Null,'Kadiyala',40000,10

UNION ALL

SELECT 'Glen',Null,'Jhonson',35000,20

UNION ALL

SELECT 'Ray','Fine','Muran',21000,20

Tuesday, June 16, 2009

Filtering Sensitive Text in SQL Server

A very common concern when dealing with sensitive data such as passwords is how to make sure that such data is not exposed through traces. SQL Server can detect and filter the SQL statements in traces that include the usage of DDL and built-ins (such as OPEN SYMMETRIC KEY, and EncryptByKey) that are known to include potentially sensitive data.

Check out the complete article Filtering (obfuscating) Sensitive Text in SQL Server from Raul Garcia

Monday, June 15, 2009

Reading XML file in PowerShell

Today, I am going to talk about reading an XML file in PowerShell.

Let’s define the XML structure file:

Declare a variable to get the content of XML file. Make sure the file path. In my case file is located in H:\ Drive and also my powerShell Home directory is H:\. I would always recommend you to use Full Path of the file.

PS H:\> $EmpList = get-content Employee_details.xml

And use the path to display the XML content information in tabular format.

PS H:\> $EmpList.Employee.Row

Dept_ID Dept Dept_Mgr

------- ---- --------

1 Admin Krishna

2 Finance Kadiyala

3 HR Vijaya

4 Consulting Vijaya Kadiyala

Sunday, June 14, 2009

Powerful text editor - UltraEdit

In this article I would like to explain some of the cool features of UltraEdit v15.00 that I liked when I was working with it. This tool will significantly save your time when you are at work.

Below are the some of the features that I had explored.

Macros to do the repetitive tasks:

One very common task that we do in our day-to-day life is “Find & Replace”. If you want to perform Find & Replace of certain characters in all the files with in a directory then you need to write your own program to do it or you need to use some special DOS commands. But with UltraEdit you can write a macro to do all these for you. Creating a macro is very similar to creating it in Excel Sheet. You can also assign Short Cut key to this while creating a macro. This will definitely save lot of time if you are doing repetitive tasks. In addition to this we can also edit macros and add existing commands of UltraEdit to perform some more tasks.

Summary to see the Occurrences of the string:

You can also see the summary of the occurrences of the string in a file. If you use “Highlight All Items Found” in Find dialog box under advanced section, it will highlight the occurrence of the string in the file. In addition to this if you select the option “List Lines Containing the String” then it will display summary dialog box with the line numbers of the string in file.

Opening Browser from Your file with the selected string:

One another cool feature of this tool is to open the web browser. You can simply select the text and click on any of the Icons of Google, yahoo or WikiPedia to open web Browser and search of the selected string. This will certainly save a lot of time. This is just click away for search.

Select specific portion of the file content:

This is very interesting feature of this tool, where you can change the mode of selection to column and then you can select vertically. In addition to this, you can also apply Sum operation based on the selection.

Regular Expression to replace:

If we want to remove all the blank lines in a file with out using regular expressions, imagine how much work we need to do. If we have a regular expression option in the tool then in just few clicks you can replace all the blank lines. Any tool which provides this feature is a great tool.

Find History:

When ever you search for something in a file, it stores the search string for future use. So in this way you don’t have to remember the search string. This is also applicable to replace.

Like this, there are so many countless features available in this tool. Check out the cool tips on what you can do with this tool. Use this tool to work in a smart way…..

Friday, June 12, 2009

How to Find out the dependencies using SQL Server Management Studio

Today I would like to give you simple tip to find out all the dependencies on a table using SQL Server Management Studio.

>>>> Open and Login into SQL Server Management Studio (SSMS)>>>> Choose the database in the Object Explorer

>>>> Expand the Tables section

>>>> Right Click on the Table Name and Click on View Dependencies.

I have only two objects which depends on this table.

Interesting Observation on IntelliSense in SQL Server – Part 1

In my previous article I talked about one interesting observation on SQL Server Intellisense. Today I came across another one. I hope Microsoft is going to improve the intellisense in the next release of Service Pack or probably in the next version of SQL Server.

I am using following statement to create a new table and load the data.

SELECT * INTO EMP_TMP

FROM EMPLOYEES

And then querying data from new table, There is nothing wrong the below statement. It is just that Intellisense is not updated with the information.

SELECT * FROM EMP_TMP

If you look at the below screen shot there is red line around EMP_TMP table. When I hover the mouse on EMP_TMP it displays “Invalid Object Name ‘EMP_TMP’”.

Thursday, June 11, 2009

Most Power Full commands in PowerShell

Today I am going to talk about the 3 most power full commands you need to know to get started on PowerShell.

The three Power Full Commands are

1) get-help

2) get-command

3) get-member

get-help command displays the information about cmdlets and concepts. You can also use “help” command to get the same kind of information.

PowerShell DotNetVJ:\> Get-help get-command

This command would display short or one page description about the command. If you want more and detailed information then you need to pass “-Detailed” parameter.

PowerShell DotNetVJ:\> Get-help get-command -Detailed

The above command displays all the information related to get-command which spans in multiple and you have scroll thru to read everything. Add “ more” to limit the out put to one page and press Space key to continue.

You can also get the help about get-help command.

PowerShell DotNetVJ:\> Get-help get-help

Get-command command displays the information about cmdlets and other elements of PowerShell commands.

PowerShell DotNetVJ:\> get-command

The above command displays all the cmdlets. You can also use wild characters to limit the records.

Lets say you want to display all the cmdlets starting with “write” then simply issue the below command.

PowerShell DotNetVJ:\> get-command write*

Get-Member gets information about the members of objects. This method can accept the value from the pipeline or from inputObject parameter. Your output depends on how you are passing the input to this command.

PowerShell DotNetVJ:\> get-command get-member

PowerShell DotNetVJ:\> get-command get-member

Wednesday, June 10, 2009

An Introduction to Oslo

What is Oslo?

"Oslo" is the code name for a family of new technologies that enable data-driven model based development. First we will explore the nature of model driven development and then apply the concept of model driven development to Oslo.

Speaker:

Stephen Forte, TelerikStephen Forte is the Chief Strategy Officer of Telerik, a leading vendor in .NET components.

Date: Thursday, June 18, 2009

Time: Reception 6:00 PM , Program 6:15 PM

Location: Microsoft , 1290 Avenue of the Americas (the AXA building - bet. 51st/52nd Sts.) , 6th floor

You must register at https://www.clicktoattend.com/invitation.aspx?code=138919 in order to be admitted to the building and attend.

Reference: NYC .NET Developer Group

.Net Interview Questions

ASP.NET

ASP.Net Interview Questions - Part 1

ASP.Net Interview Questions - Part 2

ASP.Net Interview Questions - Part 3

ASP.Net Interview Questions - Part 4

ASP.Net Interview Questions - Part 5

ASP.Net Interview Questions - Part 6

ASP.Net Interview Questions - Part 7

ASP.Net Interview Questions - Part 8

ASP.Net Interview Questions - Part 9

ASP.Net Interview Questions - Part 10

ASP.Net Interview Questions - Part 11

ASP.Net Interview Questions - Part 12

C#

C# Interview Questions

Very Famous Error in PowerShell

In this post i would like to give you the solution to a very famous error in PowerShell.

File H:\WindowsPowerShell\profile.ps1 cannot be loaded because the execution of scripts is disabled on this system. Please see "get-help about_signing" for more details.At line:1 char:2+ . <<<< 'H:\\WindowsPowerShell\profile.ps1'

If you are getting the above error it means you are trying to execute some scripts (.ps1) or some configuration files (.ps1xml).

This error is related to the execution Policy of the PowerShell. Use Get-ExecutionPolicy command to find out the current execution policy.

PS H:\> get-ExecutionPolicy

Restricted

Now change the execution policy to "UnRestricted" to resolve or eliminate the error.

PS H:\> Set-ExecutionPolicy UnRestricted

PS H:\>

That's it :)

Changing Prompt And Execution Policy in PowerShell

In this article I am going to show you, how to change the prompt of your PowerShell and few lines about Execution Policy in PowerShell.

When you start the PowerShell I am getting following prompt

PS H:\>

I want to change this PS H:\> to PowerShell VJ:\>.

In order to change this first you need to understand Execution Policy. Execute the

get-ExecutionPolicy command to find out the current execution policy.

PS H:\> get-ExecutionPolicy

Restricted

PS H:\>

Execution Policy is not thing but set of rules that governs how PowerShell execute scripts on your machine.

There are 4 different execution policies in PowerShell.

Restricted:

If the Current Execution Policy is set to Restricted then You can't exeucte any scripts (.ps1) or configuration (.ps1xml) files.

AllSigned:

If the Current Execution Policy is set to AllSigned then Scripts (.ps1) and Configuration (.ps1xml) files must be digitally signed.

Remote Signed: Ps1 and .Ps1xml files from the internet must be digitally signed.

If the Current Execution Policy is set to RemoteSigned then Any Scripts (.ps1) and Configuration (.ps1xml) files which are downloaded from the internet must be digitally signed.

Unrestricted: No digital signatures are required and all scripts can be executed.

If the Current Execution Policy is set to Unrestricted then All scripts and configuration files can be executed.

There are two different ways in which you can change the execution policy.

The first method, using Set-ExecutionPolicy command and second method is using Windows Registry. I do not recommend you to modify the execution policy using Windows Registry.

Using Set-Execution Policy

PS H:\> set-executionpolicy Unrestricted

PS H:\>

What this does is, it will create an entry in the registry in the following location.

HKEY_LOCAL_MACHINE\SOFTWARE\Microsoft\PowerShell\1\ShellIds\Microsoft.PowerShell

"ExecutionPolicy"="UnRestricted "

Using Registry

Goto the following location

HKEY_LOCAL_MACHINE\SOFTWARE\Microsoft\PowerShell\1\ShellIds\Microsoft.PowerShell

Add new string Value If it doesn’t exists.

Name = ExecutionPolicy

Value = UnRestricted

Now lets see, how to change the prompt.

Create “WindowsPowerShell” Folder in "My Documents" Folder and create profile.ps1 file.

Put the following lines of code in that

function prompt

{

"PowerShell DotNetVJ:\> "

}

And start the PowerShell to see new the Prompt.

Windows PowerShell

Copyright (C) 2006 Microsoft Corporation. All rights reserved.

PowerShell DotNetVJ:\>

That’s it.

Tuesday, June 9, 2009

Working with Arrays and Hash Tables in PowerShell

PowerShell variables can also store arrays. Each item is assigned a unique Index number and starts with 0.

To create an array in PowerShell, create a variable and assign list of values separated by comma.

PS H:\> $MyArray = ('A','B','C','D','E','F','G','H')

To display the values just use square brackets along with the Array Index.

PS H:\> $MyArray[0]

A

You can simply type the PowerShell Variable names to display all the values in that array.

PS H:\> $MyArray

A

B

C

D

E

F

G

H

To Get the length of the array just use the Count Property of the Array.

PS H:\> $MyArray.Length

8

Updating Array

You can also change any element in array by using the Index.

PS H:\> $MyArray[4] = "VIJAY"

PS H:\> $MyArray

A

B

C

D

VIJAY

F

G

H

Adding new element to Existing Array

You can’t add new element to the existing array. You need to create new array and using + operator you can add new elements to existing array.

PS H:\> $MyNewArray = ('KADIYALA','KRISHNA')

PS H:\> $MyArray = $MyArray+$MyNewArray

PS H:\> $MyArray

A

B

C

D

VIJAY

F

G

H

KADIYALA

KRISHNA

You can also specify the range of the integers to create an array. You just have to specify ranges of integers using the .. operator

PS H:\> $MyArray = 1..10

PS H:\> $MyArray

1

2

3

4

5

6

7

8

9

10

Creating Multi Dimensional Arrays

Creating multi dimensional array is very similar to creating single dimensional arrays. You need to enclose the values in “(“and “)”.

PS H:\> $MyMultiArray = (('A','B'),('C','D'),('E','F'))

PS H:\> $MyMultiArray

A

B

C

D

E

F

PS H:\> $MyMultiArray[0]

A

B

PS H:\> $MyMultiArray[0][0]

A

PS H:\> $MyMultiArray[1][0]

C

Creating Hash Tables

Hash tables are very similar to multi dimensional arrays. The major difference between Multi dimensional and Hash tables are the Index. In Hash tables you can use your own index name to read the values.

PS H:\> $MyHash = @{'First_Name'='Vijaya'; 'Middle_Name'='Krishna'; 'Last_Name'='Kadiyala'}

PS H:\> $myHash['First_Name']

Vijaya

PS H:\> $myHash['Middle_Name']

Krishna

PS H:\> $myHash['Last_Name']

Kadiyala

PS H:\> $myHash['First_Name'] + ' ' + $myHash['Middle_Name'] + ' ' + $myHash['Last_Name']

Vijaya Krishna Kadiyala

PS H:\>

Monday, June 8, 2009

Expression in PowerShell

Additional capability of PowerShell is expressions.

Let’s look at the simple example

PS H:\> (150*15)/20

112.5

PS H:\>

One thing to note here is PowerShell displayes the values immediately. Where in other scripting languages you need to use either variable or use special commands to print the value.

You can also store the output of this expression into a variable.

PS H:\> $Result = (150*15)/20

PS H:\> $Result

112.5

PS H:\>

Friday, June 5, 2009

Windows Mobile 6.5 Developer Tool Kit

The Windows Mobile 6.5 Developer Tool Kit adds documentation, sample code, header and library files, emulator images and tools to Visual Studio that let you build applications for Windows Mobile 6.5. This document contains important information about this package. For general information about writing software for Windows Mobile, please see the Windows Mobile Developer Center. The Windows Mobile 6 SDK must also be installed in order to use any of the Windows Mobile 6.5 Gesture API or samples. Windows Mobile 6.5 Developer Tool Kit comes with the following Emulator Images:

>> Windows Mobile 6.5 Professional Square Emulator

>> Windows Mobile 6.5 Professional QVGA Emulator

>> Windows Mobile 6.5 Professional WQVGA Emulator

>> Windows Mobile 6.5 Professional VGA Emulator

>> Windows Mobile 6.5 Professional WVGA Emulator

>> Windows Mobile 6.5 Standard Square Emulator

>> Windows Mobile 6.5 Standard QVGA Emulator

Download Windows Mobile 6.5 Developer Tool Kit

Thursday, June 4, 2009

Variables in PowerShell

Why do we need a variable?

Variable is a storage place for data. In PowerShell we can store almost anything, from strings to Objects.

How do you define a Variable?

To define a variable just prefix $ to any sequence of characters. PowerShell variables are not case sensitive. Variable can have any thing, starting from (A-Z) and (0-9) and (_).

There is no length restriction to the variable name but it is always good to follow the same naming conventions, which we use in .Net or in T-SQL programming languages.

Built-in Variables

When PowerShell is started, a number of variables are defined and assigned the environmental values.

PS H:\> set-location variable:

PS Variable:\> get-childitem

There are two types of built-in variables. One type of variable stores the configuration information for the current session and another type of variable stores the personal preferences.

Make your life easy with Alias

Alias makes the life very easy. PowerShell has alias names for most of its commands. So you don’t have to remember the complete command to use. You can use the alias to perform the same operation.

Get-process is the command to get all the processes on your machine and the alias for this is gps.

To get all the aliases just use get-alias method.

Working with alias

Several alias cmdlets enable you to define new aliases, export aliases, import aliases and display existing aliases. By using the following command, you can get a list of all related alias cmdlets.

Export and Import aliases are to export and import the aliases from different sessions. Get-Alias is to get the all aliases. New and Set Aliases are to define new aliases with in current session. If you want to persist your aliases then you need to set these things in profile.ps1.

Export and Import aliases are to export and import the aliases from different sessions. Get-Alias is to get the all aliases. New and Set Aliases are to define new aliases with in current session. If you want to persist your aliases then you need to set these things in profile.ps1.

One thing to remember is you can’t use predefined alias names to your own commands.

If you try to do that then you will get below error.

PS Variable:\> set-alias gps pwd

Set-Alias : Alias is not writeable because alias gps is read-only or constant and cannot be written to.

Scope of variables

Scope is a logical boundary in PowerShell. Scopes can be defined as global, local, script, and private.

Global scope applies to an entire PowerShell instance. These variables can’t be shared between the sessions. To declare these kinds of variables just prefix the $Global: to the variable.

Local scope variables are declared inside the function. Once the processing is completed you can’t access this variable.

Script scope variables are declared inside the script. The lifetime of this variable is as long as the script is running.

Private scope is similar to Local but only difference is this value can’t be inherited. To declare these kinds of variables just prefix the $Private: to the variable.

Is TRUNCATE faster to delete the data from a table?

When I want to delete all the records from a table, the only command that comes to my mind is TRUNCATE. Is there any command that is faster than TRUNCATE to delete the data? Answer YES. Instead of issuing TRUNCATE on a table, you can simply rename the table and CREATE new table with the same structure. Drop the Old table when there is less load on the server.

When we perform RENAME or DROP operations on a table, all the objects which depends on the table gets invalidated. So use sp_recompile stored procedure to compile all the objects which depends on this table.

Wednesday, June 3, 2009

Summer 2009 MSDN “Suburban” Roadshow

Peter Laudati and Bill Zack are happy to announce that they will be kicking off a late spring/early Summer 2009 series of the MSDN “Suburban” Roadshow! There’s a tendency for Microsoft (and other companies) to host live events in NYC as it’s the center of our area.

Agenda:

Up, Up, and Away! Storage in the Microsoft Cloud

Developing on Microsoft Windows 7

Using MS AJAX & jQuery with ASP.NET

Location Infragistics Headquarters 50 Millstone Rd., Building 100 East Windsor, NJ 08520

Date & Time June 11, 2009 1:00 PM - 5:00 PM

Registration Click here to Register Or call 877.673.8368 With Event ID: 1032415493

Location Set Focus 4 Century Drive Parsippany, NJ 07054

Date & Time June 16, 2009 1:00 PM - 5:00 PM

Registration Click here to Register Or call 877.673.8368 With Event ID: 1032415492

Location Microsoft New York Office 1290 Avenue of the Americas, 6th Floor New York, NY

Date & Time June 24, 2009 1:00 PM - 5:00 PM

Registration Click here to Register Or call 877.673.8368 With Event ID: 1032415487

Reference : NYC .NET Dev Group

To Get More details on this you need to register/subscribe to NYC .Net Dev User Group.

Announcing the NYC Agile Firestarter

Are you just starting out with Agile, XP or Scrum and need to get up to speed? Or do you know a thing or two about Agile but want to learn the basics so you can implement it in your organization? Then this Firestarter is for you. We'll take you from 0 to 60 in 8 hours. Bring a laptop with Visual Studio 2008 Express edition or better for an all day hands on seminar led by some of the NY area's Agile practitioners.

Register today, space is limited!

Reference: .Net NY Dev User Group

Find Active Connections in SQL Server Using PowerShell

Today i would like to show you simple code to find the active connections in SQL Server using PowerShell. PowerShell code is very easy to write. All you need is the Class names to connect to the Database to do the required job.

In Simple Steps:

1) Load the SMO object into the memory.

2) Create a new instance of SqlConnection Object

3) Assign the connection string.

4) Open the connection.

5) Create a new instance of SqlCommand Object.

6) Build the query

7) Set the Connection to Command Object

8) Execute the Command and store the results in DataReader.

9) Use While Loop to display the information.

[System.Reflection.Assembly]::LoadWithPartialName('Microsoft.SqlServer.SMO')

$oConn = New-Object -TypeName System.Data.SqlClient.SqlConnection

$oConn.ConnectionString = "SERVER=WNYCDEF3Q9W721\VJ_LCL;Integrated Security = True"

$oConn.Open()

$Cmd = New-Object -TypeName system.data.sqlclient.sqlcommand

$sSQL = "SELECT db_name(dbid) as Database_Name, "

$sSQL = $sSQL + " count(dbid) as No_Of_Connections "

$sSQL = $sSQL + " FROM sys.sysprocesses "

$sSQL = $sSQL + " WHERE dbid > 0 "

$sSQL = $sSQL + " GROUP BY dbid "

$cmd.Connection = $oConn

$Cmd.CommandText = $sSQL

$data_reader = $Cmd.ExecuteReader()

$DB_Name

$No_Of_Conn

while ($data_reader.Read())

{

$DB_Name = $data_reader.GetString(0)

$No_Of_Conn = $data_reader.GetInt32(1)

Write-host "Database Name:" $DB_Name " " "No_Of_Connections:" $No_Of_Conn " "

}

$oConn.Close()

Interesting observation with IntelliSense in SQL Server 2008

IntelliSense is a great feature of SQL Server 2008 but some times it is giving me hard time.

Check out the below image.

When I move this statement just above to the SELECT statement then message and red line disappears.

Tuesday, June 2, 2009

Very Common SQL Server Errors And Resolutions Part 3

In this article I would like to list out most frequently occurred errors and their solutions. This article will also you tell how to reproduce them.

Error: 1

Msg 245, Level 16, State 1, Line 1

Conversion failed when converting the varchar value 'VIJAY KADIYALA' to data type int.

Cause:

This error comes into the picture when you are performing operations on CHAR column and trying to convert into INT.

How to re-produce:

SELECT 'VIJAY KADIYALA' + 2

Solution:

Explicitly convert the value 2 using CAST or CONVERT function

SELECT 'VIJAY KADIYALA' + CAST(2 AS VARCHAR(2))

Error: 2

Msg 141, Level 15, State 1, Line 4

A SELECT statement that assigns a value to a variable must not be combined with data-retrieval operations.

Cause:

This error comes into the picture when you are assigning all the column values from SELECT statement into Local variable don’t match.

How to re-produce:

DECLARE @FirstName VARCHAR(30)

DECLARE @LastName VARCHAR(30)

SELECT @FirstName = FIRST_NAME,@LastName = LAST_NAME,MIDDLE_NAME FROM EMPLOYEES

WHERE LAST_NAME = 'Kadiyala'

Solution:

Match the Local variables with the No.Of Column you ate retrieving from SELECT statement.

Error: 3

Msg 145, Level 15, State 1, Line 1

ORDER BY items must appear in the select list if SELECT DISTINCT is specified.

Cause:

This error comes into the picture when you are using the DISTINCT Clause in the SELECT statement and the column which appears in the ORDER BY doesn’t part of the SELECT clause.

How to re-produce:

SELECT DISTINCT FIRST_NAME,LAST_NAME FROM EMPLOYEES

ORDER BY MIDDLE_NAME

Solution:

When you are using DISTINCT clause make sure all the Order by Columns are also part of SELECT clause.

Error: 4

Msg 8152, Level 16, State 14, Line 1

String or binary data would be truncated.

The statement has been terminated.

Cause:

This error comes into the picture when length of the value being inserted is longer than the length of the column.

How to re-produce:

INSERT INTO EMPLOYEES(FIRST_NAME) VALUES ('12345678901234567890123456789011')

The length of FIRST_NAME column is 30 Characters only.

Solution:

Make sure you pass the values based on the column Length.

Monday, June 1, 2009

PowerShell Articles

Variables in PowerShell

In this article, I discussed about System Level variables,Environmental Variables and User Defined variables alond with the scope of the variables.

Working with Arrays and Hash Tables in PowerShell

In this article, I discussed about creating Single, Multi dimensional arrays along with Hash Tables.

Expression in PowerShell

In this article, I discussed about working with expressions.

Changing Prompt And Execution Policy in PowerShell

In this article, I discussed about Changing the Prompt and understaning the execution policies.

Most Power Full commands in PowerShell

In this article, i discussed about the very famous commands in PowerShell with out which we can't work...

Reading XML file in PowerShell

In this article, I discussed about reading an XML file in PowerShell

list out the Databases in SQL Server using PowerShell

This is a small script to list out all the databases in SQL Server using PowerShell.

Find Active Connections in SQL Server Using PowerShell

This is a small script to find out all the active connections in SQL Server using PowerShell.

PowerShell Errors and Solutions:

Very Famous Error in PowerShell

list out the Databases in SQL Server using PowerShell

Let’s look at the simple script that displays the Databases in SQL Server

PowerShell:

Step 1:

[System.Reflection.Assembly]::LoadWithPartialName('Microsoft.SqlServer.SMO') out-null

Step 2:

$sSQLServer = New-Object ('Microsoft.SqlServer.Management.Smo.Server') "VIJAY_MACHINE\VJ_LCL"

VIJAY_MACHINE => is my Machine Name

VJ_LCL => is my SQL Server Instance Name

Step 3:

$SQLServerDatabases = $sSQLServer.Databases

Step 4:

$SQLServerDatabases Select Name,RecoveryModel,Size,SpaceAvailable

Output Is

Where can I use Windows PowerShell

I found one very interesting article, which talks about Windows Power Shell. This article explains the differences between various scripting languages in layman terms.

Check out the what is powershell used for a laymans comparison article for complete information.

Windows Azure Tools for Microsoft Visual Studio May 2009 CTP

Windows Azure Tools for Microsoft Visual Studio extend Visual Studio 2008 and Visual Studio 2010 Beta 1 to enable the creation, building, debugging, running and packaging of scalable web applications and services on Windows Azure. Installation includes the Windows Azure SDK.

New for the May 2009 CTP:

>> Support for Visual Studio 2010 Beta 1

>> Update for Visual Studio 2008

>> Improved integration with the Development Fabric and Storage services to improve the reliability of debug and run of Cloud Services from Visual Studio

>> Enhanced robustness and stability

Check out and download the complete information from Microsoft Site.

Bing is Live......

Microsoft's much awaited Search engine is on......My first comment,it looks so great and the features which i like are

1) Additional Information in the search results

2) 3D view of the maps

3) Bird's eye ...

4) Collections... in the Maps...

5) Sharing the Map Location and can also blog it...

6) Add a Stop in between Source and destinations in Maps..

7) Showing the directions based on Shortest Path and Shortest Distance....

Keep watching it's going to rock...

Need to explore more....

Saturday, May 30, 2009

TSql Unit Test Generator

I found one tool which is very interesting to generate the Test Scripts for TSQL programs automatically.

The tool , which I am talking about is TSql Unit Test Generator. This Automatically generates unit tests in SQL for programs in SQL Server. The unit tests created are written in TSQL . This framework is intended to be used with the TSQLUnit framework.

Friday, May 29, 2009

60 Seconds with Michael Coles

Lets check out more about his books and his views on SQL Server 2008.

VJ: Please tell us about the motivation behind writing a book on XML?

Michael Coles: Initially I needed a reference book for SQL Server 2005’s new XML functionality (when it was first introduced), but everything I found seemed to be about the backwards-compatible SQL Server 2000 functionality that was carried forward. I think the lack of a reference that really covered the new features like the XML data type methods and XML Schema collections really made me decide to write Pro SQL Server 2008 XML.

VJ: There are so many features in SQL Server 2008, what do you think are the most useful for developers?

Michael Coles: (I assume this is about new features specifically). I’ve gotten a lot of good use out of a lot of the new features. It’s really hard to say what’s the “most useful” – it really depends on what you need. I’ve found the encryption functionality in SQL Server 2008 a particularly compelling feature, but it hasn’t gotten a lot of fanfare yet. The new functionality is integrated full-text search makes it a lot more useful as well. I also like the spatial data types, but it’s such specific functionality that I don’t know how many developers will get the opportunity to take advantage of it directly, without some sort of off-the-shelf geospatial/mapping software sitting there to use it.

VJ: Please tell us the advantages of storing data in XML in comparison with relational database?

Michael Coles: Now that’s a trick question :) There’s not really an “advantage” to storing XML versus relational form. What happens is people have requirements to store XML data they may receive from other sources, they may have a requirement to use XML columnsets for a more dynamic database, or they may just need to parse and process XML data.

There are a lot of DBAs who flatly reject XML, and in many cases they’re right. But XML is the lingua franca of the internet and at some point developers and DBAs will have to deal with it. To me some of SQL Server’s most compelling XML features are XQuery support, the FOR XML clause, and the shredding (converting XML to relational format) support of the XML data type. In other words a lot of SQL Server’s best XML features deal with processing XML on the server.

VJ: You are Microsoft Most Valuable Professional, what do you think about this program.

Michael Coles: It’s a very humbling experience. You get to meet some of the smartest SQL people on the planet – folks like Louis Davidson, Adam Machanic, Hugo Kornelis, Erland Sommarskog, Itzik Ben-Gan (and a bunch of others). To actually meet these people you’ve looked up to for so long is incredible. To have one or two say “I enjoyed your article” or “I like your code sample” is like icing on the cake.

VJ: Please tell us about your favorite authors?

Michael Coles: Oh wow – I like so many authors. For technical writing, I really like Adam Machanic and Itzik Ben-Gan’s books, Erland Sommarskog’s incredible white papers, and Pinal Dave’s blog (sqlauthority.com). For nontechnical writing I enjoy Simon Singh and Douglas Adams.

VJ: Please tell us about the SQL Server books that are part of your bookshelf?

Michael Coles: Let’s see – as examples I have the Knight and Veerman books (Pro and Expert SQL Server 2005 Integration Services books), the Dewson Pro SQL Server 2005 Assemblies book, the entire Inside SQL Server 2005 series from Delaney and her cowriters. I usually buy SQL books for reference when I’m doing very specific tasks. When I buy a SQL book I tend to spend a lot of time looking through book indexes for keywords related to a specific problem I’m experiencing. Most of the books on my technical bookshelf are non-SQL books though, like Schneier’s Applied Cryptography and Knuth’s The Art of Computer Programming.

VJ: Please tell us about the enhancements or new features you want to see on XML in next version of SQL Server.

Michael Coles: I’d personally like to see more comprehensive support for the XQuery standard and better overall XML performance. I think over time both of these will be addressed. Keeping in mind the new generation of XML support is only 4 years old, I think it’ll get even better as the features mature and new methods of optimizing XML manipulation and queries. One of the best things I think developers can do is provide Microsoft with plenty of feedback (through the MS Connect website, via user groups, on blogs, etc.) about which XML features they’re using, which features they find most useful, and what they’d like to see improved. This kind of feedback can help get new features and improvements on the SQL Server team’s radar.

VJ: Please share your valuable message for the database Developers

Michael Coles: I think the most important thing is to share your knowledge and experiences with other developers. A lot of the tips and tricks that some developers take for granted aren’t necessarily widely known, and it helps everyone when we contribute to the community together.

VJ: Please tell us about your upcoming books

Michael Coles: Right now I’m finishing up Expert SQL Server 2008 Encryption with James Luetkehoelter. This is going to be the first book that specifically focuses on encryption technology in SQL Server and how you can leverage it to protect your corporate data.

I’m also considering writing a freely downloadable e-book—I still have to work out the details and find the time. I’ll keep you posted on that one.

I would like to thank Michael Coles for sharing his views with us....